Convolutional Neural Network (CNN) for Gender Determination by Morphometry of Eyes

This is a workflow for building a CNN model in Keras that classifies a scanned image of an eye as male or female. We use flow_from_dataframe because the labels are provided in a separate CSV file. Data augmentation is applied to improve model performance and generalization. During model fitting, the ModelCheckpoint and EarlyStopping callbacks are used.

- Introduction

- Download Image Data

- Load Libraries

- Exploratory Data Analysis

- Visualization

- Data Augmentation

- Data Generator

- Building Model & Hyperparameter tuning

- Predict the Testing Set

- Done! 👍

Introduction

The anthropometric analysis of the human face is an important study for performing craniofacial plastic and reconstructive surgeries. Facial anthropometry is affected by various factors such as age, gender, ethnicity, socioeconomic status, environment, and region. Plastic surgeons who repair and reconstruct facial deformities find the anatomical dimensions of the facial structures useful for their surgeries. These dimensions result from the physical or facial appearance of an individual. Factors like culture, personality, ethnic background, age, eye appearance, and symmetry contribute substantially to facial appearance or aesthetics.

Download Image Data

We can use GoogleDriveDownloader from the google_drive_downloader library in Python to download the shared file from Google Drive. The file_id of the Google Drive link is 1f7uslI-ZHidriQFZR966_aILjlkgDN76.

from google_drive_downloader import GoogleDriveDownloader as gdd

gdd.download_file_from_google_drive(
    file_id='1f7uslI-ZHidriQFZR966_aILjlkgDN76',
    dest_path='content/eye_gender_data.zip',
    unzip=True)

Load Libraries

# data manipulation
import pandas as pd

# data visualization
import matplotlib.pyplot as plt

# linear algebra and multidimensional arrays
import numpy as np

# deep learning tool
import tensorflow as tf
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Flatten, Dense, Dropout
from keras.callbacks import ModelCheckpoint, EarlyStopping

# operating system dependent functionality
import os

# image processing
import cv2
import PIL.Image  # import the submodule explicitly so PIL.Image.open is available

Exploratory Data Analysis

FOLDER_PATH = "content/eye_gender_data"

def get_image_dim(filename):
    # return the (width, height) of one training image
    image = PIL.Image.open(os.path.join(FOLDER_PATH, "train", filename))
    return image.size

train_df = pd.read_csv(os.path.join(FOLDER_PATH, "Training_set.csv"))
train_df[['width', 'height']] = train_df['filename'].apply(get_image_dim).to_list()
train_df['is_square'] = train_df['width'] == train_df['height']
train_df.head()

Explore the statistics of train_df:

train_df.describe(include='all')

Insight:

- We have 9220 images for the training data

- Count and unique of filename are the same, which means there is no duplicated data, great!

- There are two classes of label: 5058 images are labeled as male and the remaining 4162 are female

- width and height (pixels) of the training images differ, so we need to resize them to one fixed shape

- Unique of is_square is 1, which means all training images are square in shape
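
The checks behind these insights can be sketched on a small stand-in table. The rows below are made up for illustration (the real train_df is loaded from Training_set.csv), and the label strings "male" and "female" are assumed spellings.

```python
import pandas as pd

# Hypothetical stand-in for train_df; the real one comes from Training_set.csv
toy_df = pd.DataFrame({
    "filename": ["a.jpg", "b.jpg", "c.jpg", "d.jpg"],
    "label": ["male", "female", "male", "male"],
    "width": [50, 64, 117, 50],
    "height": [50, 64, 117, 50],
})

# Count equals unique exactly when no filename is repeated
has_duplicates = toy_df["filename"].duplicated().any()

# Number of images per class
label_counts = toy_df["label"].value_counts()

# More than one distinct size means the images must be resized to a fixed shape
n_sizes = toy_df[["width", "height"]].drop_duplicates().shape[0]

# True only if every image has width == height
all_square = (toy_df["width"] == toy_df["height"]).all()

print(has_duplicates, label_counts.to_dict(), n_sizes, all_square)
```

The same expressions should work on train_df directly, since they only rely on the filename, label, width, and height columns.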

Visualization

fig, axes = plt.subplots(2, 5, figsize=(12, 5))

# show the first ten training images with their labels
for ax, (idx, row) in zip(axes.flatten(), train_df.head(10).iterrows()):
    image = PIL.Image.open(os.path.join(FOLDER_PATH, "train", row['filename']))
    ax.imshow(image)
    ax.set_title(row['label'])
    ax.axis('off')

The training images differ in color: some are grayscale, but most are RGB. They also show different skin colors, so feeding RGB values to the model could introduce a bias when predicting gender. Thus, we'll convert all images to grayscale before training the model.
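
To illustrate what the grayscale conversion does to an image, here is a minimal sketch using Pillow on a synthetic image. The 4x4 image and its color are made up; in this workflow the conversion itself is requested through color_mode="grayscale" in the data generator rather than done by hand.

```python
import numpy as np
from PIL import Image

# Synthetic 4x4 RGB image standing in for a training image (illustration only)
rgb_image = Image.new("RGB", (4, 4), color=(200, 120, 90))

# "L" is Pillow's 8-bit single-channel (luminance) mode
gray_image = rgb_image.convert("L")

rgb_array = np.asarray(rgb_image)
gray_array = np.asarray(gray_image)

print(rgb_array.shape)   # height, width, and three color channels
print(gray_array.shape)  # height and width only, one intensity per pixel
```

After conversion each pixel carries a single intensity value, so the model no longer sees hue information such as skin tone.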

Data Augmentation

We apply on-the-fly data augmentation, a technique that effectively expands the training dataset by generating modified versions of the original images, which can improve model performance and the ability to generalize.

- Applied transformation: rotation, shift, shear, zoom, flip

- Rescale the pixel values to the range 0 to 1

- Reserve 20% of the training data for validation, and use the remaining 80% for model fitting
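
Two of these steps are simple enough to show by hand. The sketch below applies rescaling and a horizontal flip to a tiny made-up array with NumPy; it only illustrates the operations and is not how ImageDataGenerator is implemented internally.

```python
import numpy as np

# Tiny 2x3 single-channel "image" with 8-bit pixel values (made-up numbers)
image = np.array([[0, 51, 102],
                  [153, 204, 255]], dtype=np.uint8)

# Rescaling: multiply by 1/255 so the values fall between 0 and 1
rescaled = image * (1.0 / 255)

# Horizontal flip: reverse the order of the columns
flipped = image[:, ::-1]

print(rescaled.min(), rescaled.max())
print(flipped)
```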

train_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rotation_range=20,
    width_shift_range=0.01,
    height_shift_range=0.01,
    shear_range=0.01,
    zoom_range=0.01,
    horizontal_flip=True,
    rescale=1./255,
    validation_split=0.2,
    fill_mode='nearest')

# no data augmentation for validation data
val_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1./255,
    validation_split=0.2)

Data Generator

IMAGE_SIZE = (100, 100)
BATCH_SIZE = 128
SEED_NUMBER = 42  # for reproducible result

gen_args = dict(
    dataframe=train_df,
    directory=os.path.join(FOLDER_PATH, "train"),
    x_col='filename',
    y_col='label',
    target_size=IMAGE_SIZE,
    class_mode="binary",
    color_mode="grayscale",  # convert RGB to grayscale color channel
    batch_size=BATCH_SIZE,
    shuffle=True,
    seed=SEED_NUMBER)

# flow from dataframe
train_ds = train_datagen.flow_from_dataframe(subset='training', **gen_args)
val_ds = val_datagen.flow_from_dataframe(subset='validation', **gen_args)

This gives 7376 images for model fitting and the remaining 1844 images for validation.
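
These counts follow from the 20% validation split, and together with the batch size they determine how many batches make up one full pass over each subset. A quick arithmetic check, assuming the split is a plain 20% share of the 9220 rows:

```python
import math

N_IMAGES = 9220    # training images reported in the exploratory analysis
VAL_SPLIT = 0.2    # validation_split passed to ImageDataGenerator
BATCH_SIZE = 128

n_val = int(N_IMAGES * VAL_SPLIT)
n_train = N_IMAGES - n_val

# One full pass needs this many batches (the last batch may be partial)
train_batches = math.ceil(n_train / BATCH_SIZE)
val_batches = math.ceil(n_val / BATCH_SIZE)

print(n_train, n_val, train_batches, val_batches)
```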

Building Model & Hyperparameter tuning

Now we are finally ready to train the model. A CNN acts as an automatic feature extractor for the images: convolutional layers combine adjacent pixels into feature maps, pooling layers downsample them, and a prediction (fully-connected) layer then solves the classification problem.

model = Sequential(
    [
        # First convolutional layer
        Conv2D(
            filters=64,
            kernel_size=3,
            strides=1,
            padding="same",
            activation="relu",
            input_shape=IMAGE_SIZE + (1, )),
        # First pooling layer
        MaxPooling2D(
            pool_size=2,
            strides=2),
        # Second convolutional layer
        Conv2D(
            filters=32,
            kernel_size=3,
            strides=1,
            padding="same",
            activation="relu"),
        # Second pooling layer
        MaxPooling2D(
            pool_size=2,
            strides=2),
        # Third convolutional layer
        Conv2D(
            filters=16,
            kernel_size=3,
            strides=1,
            padding="same",
            activation="relu"),
        # Third pooling layer
        MaxPooling2D(
            pool_size=2,
            strides=2),
        # Flattening
        Flatten(),
        # Fully-connected layer
        Dense(512, activation="relu"),
        Dropout(rate=0.2),
        Dense(128, activation="relu"),
        Dropout(rate=0.2),
        Dense(32, activation="relu"),
        Dropout(rate=0.2),
        Dense(1, activation="sigmoid")
    ]
)

model.summary()
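
The output shapes reported by the summary can be reproduced by hand. The sketch below is plain arithmetic, not Keras code: it assumes that padding="same" with stride 1 keeps the spatial size and that each 2x2 pooling layer with stride 2 uses the default "valid" padding. The helper names are made up for this illustration.

```python
def conv_same(size):
    # padding="same" with stride 1 keeps the spatial size unchanged
    return size

def max_pool(size, pool_size=2, stride=2):
    # "valid" pooling drops any leftover border pixels
    return (size - pool_size) // stride + 1

size = 100                         # IMAGE_SIZE is (100, 100)
filters_per_block = [64, 32, 16]   # filters of the three Conv2D layers
shapes = []
for filters in filters_per_block:
    size = conv_same(size)
    size = max_pool(size)
    shapes.append((size, size, filters))

# Flatten turns the last feature map into one long vector
flatten_units = shapes[-1][0] * shapes[-1][1] * shapes[-1][2]

print(shapes, flatten_units)
```

Each pooling layer roughly halves the width and height, so the first Dense layer receives a much smaller input than the raw 100x100 image would suggest.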

model.compile(
    optimizer="adam",
    loss="binary_crossentropy",
    metrics=["accuracy"])

STEPS = 500  # batches per epoch, used for both training and validation

# save the model whenever the validation accuracy improves
checkpoint = ModelCheckpoint("model.hdf5",
                             verbose=1,
                             save_best_only=True,
                             monitor="val_accuracy")

# stop once the validation accuracy has not improved for 5 epochs in a row
es_callback = EarlyStopping(monitor='val_accuracy',
                            patience=5,
                            restore_best_weights=True,
                            verbose=1)

model.fit(
    x=train_ds,
    validation_data=val_ds,
    steps_per_epoch=STEPS,
    validation_steps=STEPS,
    callbacks=[checkpoint, es_callback],
    epochs=100)
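
The effect of EarlyStopping with patience=5 on val_accuracy can be pictured with a small simulation. This is a simplified sketch of the idea, not the Keras implementation; the function name and the accuracy values are made up for illustration.

```python
def simulate_early_stopping(val_accuracies, patience=5):
    """Return (stop_epoch, best_epoch), both 0-based, for a list of scores."""
    best = float("-inf")
    best_epoch = -1
    wait = 0
    for epoch, accuracy in enumerate(val_accuracies):
        if accuracy > best:
            # Improvement: remember it and reset the patience counter
            best, best_epoch, wait = accuracy, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                # No improvement for `patience` epochs in a row: stop here
                return epoch, best_epoch
    # Ran through all epochs without triggering the stop
    return len(val_accuracies) - 1, best_epoch

# Made-up validation accuracies, one per epoch
history = [0.70, 0.75, 0.80, 0.79, 0.80, 0.78, 0.79, 0.80, 0.77, 0.90]
stop_epoch, best_epoch = simulate_early_stopping(history, patience=5)
print(stop_epoch, best_epoch)
```

With restore_best_weights=True, the model ends up with the weights from the best epoch rather than those from the epoch where training stopped.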

fig, ax = plt.subplots(1, 2, figsize=(10, 4))

history_df = pd.DataFrame(model.history.history)

history_df[['loss', 'val_loss']].plot(kind='line', ax=ax[0])

history_df[['accuracy', 'val_accuracy']].plot(kind='line', ax=ax[1])

Predict the Testing Set

test_df = pd.read_csv(os.path.join(FOLDER_PATH, "Testing_set.csv"))
test_df.head()

test_datagen = tf.keras.preprocessing.image.ImageDataGenerator(
    rescale=1./255)

test_ds = test_datagen.flow_from_dataframe(
    dataframe=test_df,
    directory=os.path.join(FOLDER_PATH, "test"),
    x_col='filename',
    target_size=IMAGE_SIZE,
    class_mode=None,
    color_mode="grayscale",  # convert RGB to grayscale color channel
    shuffle=False
)

test_ds.reset()

y_pred = (model.predict(test_ds, steps=len(test_ds)) > 0.5).astype("int32").flatten()

y_pred

class_mapping = {v:k for k, v in train_ds.class_indices.items()}

class_mapping

predictions = np.vectorize(class_mapping.get)(y_pred)

predictions
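
The thresholding and label mapping above can be traced on a few made-up probabilities. The mapping {0: "female", 1: "male"} is an assumption for this sketch; the real mapping is whatever train_ds.class_indices reports.

```python
import numpy as np

# Made-up sigmoid outputs, shaped like model.predict(...) for four images
probabilities = np.array([[0.12], [0.93], [0.50], [0.51]])

# Values strictly above 0.5 become class 1, everything else class 0
class_ids = (probabilities > 0.5).astype("int32").flatten()

# Assumed mapping; the real one is derived from train_ds.class_indices
class_mapping = {0: "female", 1: "male"}
labels = np.vectorize(class_mapping.get)(class_ids)

print(class_ids, labels)
```

Note that a probability of exactly 0.5 falls into class 0, because the comparison is strict.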

res = pd.DataFrame({'filename': test_ds.filenames, 'label': predictions})

res.to_csv("submission.csv", index=False)

Run the cell below to download submission.csv from Google Colab to your local computer.

from google.colab import files

files.download('submission.csv')

Done! 👍

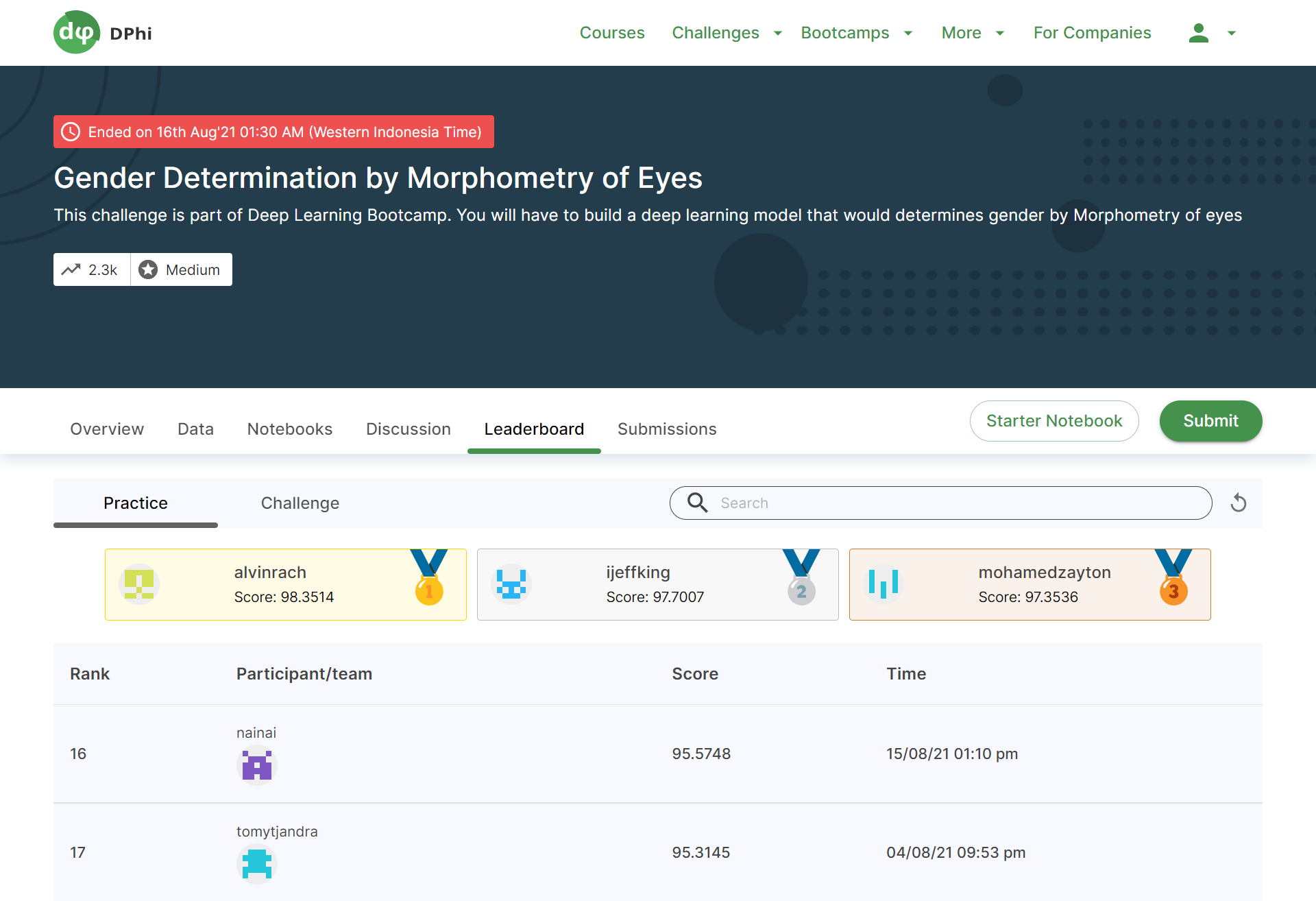

We are all set to make a submission. Let's head to the challenge page to make the submission.